Ensuring Accurate Polls in GE16

2016.01.06

RED C goes to great lengths to ensure the most accurate polling possible for our clients. Everything we do is focused on providing an unbiased and accurate picture of voter intention and behaviour; after all, this is what we are judged on.

Sometimes the methods we use are misunderstood and misinterpreted, so with the election on the way and lots of polls planned, here is another explanation of the steps we take and why we take them.

METHOD NOTE UPDATE – February 2016:

After a review of the findings of the UK polling enquiry, RED C has reviewed its approach to ensure we take account of any possibility of similar errors in Irish polling. Two issues were uncovered in the UK: the first was internet polls not including enough older voters, and the second was too many politically interested younger voters. The older voter issue does not exist in Ireland. The key, then, is to ensure the younger people in our poll are representative and that we accurately predict their turnout. Having evaluated the profile of our likely voters against the two general election exit polls and post-election studies, we have decided to be more stringent in our likely voter analysis, as this provides a profile more in line with those who have voted in the past. From today we will therefore include only those who say they are very likely to vote (8-10), rather than those likely to vote (4-10) as used previously.

Why do you poll by phone?

We use a telephone approach to polling because we believe it provides a more accurate means of reaching the electorate. When our interviewers call voters from the call centre, they have no idea who or where they are calling. It could be a mansion or a council flat in any area of the country. This means they can’t pick out certain types of people who may be easier to interview.

How do you get the numbers?

To generate the numbers we call, we use a process called random digit dialling. This takes a representative set of numbers pulled from directories, and then randomly changes the last digit to create a fully random list of phone numbers. None of the original directory numbers are used, just the random ones. This means our telephone sample covers everyone, whether they are in any directory or not. Most of our calls (up to 70% for any one poll) are now made to randomly generated mobile phone numbers, with the rest to landline phones, so we cover every possible type of voter, whether they have a landline only, a mobile only or both.
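The last-digit randomisation described above could be sketched roughly as follows. This is only an illustration: the function name `randomise_last_digit`, the made-up seed numbers, and the way collisions with directory numbers are skipped are all assumptions, not a description of RED C's actual system.

```python
import random

def randomise_last_digit(seed_numbers, rng=None):
    """Return new numbers made by replacing the last digit of each seed number."""
    rng = rng or random.Random()
    seeds = set(seed_numbers)
    generated = set()
    for number in seed_numbers:
        # Exclude the original last digit so the seed itself is never reproduced.
        candidates = [d for d in "0123456789" if d != number[-1]]
        rng.shuffle(candidates)
        for digit in candidates:
            new_number = number[:-1] + digit
            # Skip anything that happens to match another directory number.
            if new_number not in seeds:
                generated.add(new_number)
                break
    return sorted(generated)

# Hypothetical seed numbers, invented for this example only.
directory_sample = ["0871000001", "0862000002", "0113000003"]
dial_list = randomise_last_digit(directory_sample, random.Random(2016))
```

Each generated number shares all but its last digit with a directory number, but no directory number appears in the final dial list.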

How do you encourage response?

It’s important that we try to encourage the people we ring to respond to the poll, so we can get as representative a sample as possible. If someone can’t do the poll when we first call, we ask whether we can contact them again, and arrange a time to call them back when convenient. We also try to encourage people to take part by reassuring them we are a legitimate independent polling company and explaining that their views are important to us.

How do you make sure the people you end up polling are representative?

As well as our random sampling and selection, we also set targets for different sub-groups of the population to match those in the population as a whole. These “quotas” are based on the most recent known demographic data from the CSO, including the Census. We set quotas on things like age, gender, class and region of the country. This means we ensure that, for example, our final sample has the same proportion of women aged 18+ as can be found in the country as a whole.

How do you ask the questions?

We believe the way that vote intention questions are asked is very important. Whenever we conduct a poll we ask four key questions about vote behaviour as standard; then we might also ask other questions about topics surrounding vote behaviour.

We first ask how likely people are to vote. This is important because we want to get the overall vote predictions as accurate as possible, and we know that only about 70% of the population tend to actually vote in General Elections. So we need to take account of those people in our sample who won’t go and vote. Of course it is very difficult to predict with 100% certainty whether someone will vote or not, but we need to use some mechanism to take non-voters into account; otherwise we would be including the vote intention of people who won’t go to the polling booth, and this could bias our results. RED C asks people how likely they are to vote on a 10 point scale, where 1 is not at all likely and 10 is very likely to vote. In our analysis for our final headline figures, we then remove those who say they are only 1-3 out of 10 likely to vote on this scale. It is of course quite possible that some of those who state they are 4-5 on this scale won’t actually vote, but we have to cut off somewhere, and this ensures we only remove those very likely not to vote.
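The likelihood cut-off can be illustrated with a small sketch. The respondent records, field names and the `likely_voters` helper are invented for this example; the two thresholds are the 4-10 rule described above and the stricter 8-10 rule from the February 2016 method note.

```python
def likely_voters(respondents, min_score=4):
    """Keep respondents whose stated likelihood to vote (1-10) is at least min_score."""
    return [r for r in respondents if r["likelihood"] >= min_score]

# Made-up respondents, for illustration only.
sample = [
    {"id": 1, "likelihood": 10, "party": "Party A"},
    {"id": 2, "likelihood": 2, "party": "Undecided"},
    {"id": 3, "likelihood": 5, "party": "Party B"},
    {"id": 4, "likelihood": 8, "party": "Party A"},
]

kept_original_rule = likely_voters(sample)               # keeps scores 4-10
kept_stricter_rule = likely_voters(sample, min_score=8)  # keeps scores 8-10
```

Under the original rule only respondent 2 is dropped; under the stricter rule respondent 3 is dropped as well.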

We then ask who people are going to vote for. We ask them which party or independent candidate they think they would vote for if there were an election tomorrow. To be clear, this is asked of everyone in our total sample of all adults aged 18+, whether they are likely to vote or not. Then we probe further among those who have said an Independent candidate or other party in the initial question, just to check they don’t mean one of the newer parties or groupings that have been formed in the last couple of years from the large group of Independent candidates.

Finally we ask how people voted in the last General Election. This is to help us gauge how accurate our sample is, and we use this later to help analyse the data. If our sample is accurate the claimed vote behaviour at the last election should of course quite closely reflect the actual vote in 2011 among those who were eligible to vote at that time.

We ask these questions before any other questions, such as attitudes to leaders or policies, as we believe that asking them the other way round may well influence the vote intention results.

Does your poll just ask voting/election questions?

In order to provide a cost effective means of polling, our polls are normally run as part of our wider telephone omnibus service, The RED Express. An omnibus is a common approach among market research firms that allows a number of different clients to share the costs of reaching a large sample of 1,000 interviews. This means that after any poll questions for our published clients, we may also ask other questions for political, media and business organisations. These questions will always be asked after our political opinion poll, and will never conflict with its questions. It means that if you are interviewed on our poll, you may well also be asked questions about particular topics of interest for media or PR purposes, or even, for example, about what grocery stores you visited that week. Anyone can “purchase” a question on our survey, although all questions are thoroughly vetted to be unbiased and fairly asked, and to ensure that they do not conflict with other questions on the survey. As a result we have asked questions for just about every political party, a range of Independent candidates, pressure groups, media companies and a plethora of major multinational companies such as Vodafone, SuperValu, Ryanair, Aviva etc.

What else do you do to the data?

Having asked everyone how likely they are to vote, how they will vote and how they voted at the last election, we have a full data set to work with. Of course we could just publish these base or core figures, and we do supply this information in our reports. However, we believe there is some important extra analysis to conduct to ensure the best accuracy in our final results.

Firstly we check how good our sample is. The random selection and demographic quotas mean it should be pretty good. But we do a double check by looking at how those eligible to vote claimed they voted at the last General Election. Of course if our sample were a 100% accurate reflection of all adults aged 18+, then how those who were eligible say they voted at the last election should match the actual result. Normally this is pretty much the case, but for any one poll it sometimes appears to be a little biased one way or another. This could be because the people who agreed to take part in that poll are more likely to vote for one party or another, or because people don’t remember very well who they voted for and now recall voting for the party they currently plan to vote for.

To take account of both of these possible explanations (representativeness and poor recall) we apply another small weight to our sample. This ensures that the past vote answers are halfway between how our sample claimed they voted and what we know the actual vote was at the last General Election. Generally this is a very small adjustment. For example, if our sample states that 9% voted for Sinn Fein in 2011, but we know that actually 10% voted for Sinn Fein, we weight the past Sinn Fein voters to 9.5% of our total sample.
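The halfway adjustment can be worked through in a few lines. This is a minimal sketch: the function name `past_vote_weights` and the single “All others” group are assumptions made to keep the example small, while the 9% and 10% Sinn Fein figures are the ones used in the example above.

```python
def past_vote_weights(claimed_share, actual_share):
    """Return target shares (midpoint of claimed and actual) and the weight per group."""
    targets = {p: (claimed_share[p] + actual_share[p]) / 2 for p in claimed_share}
    # Weight applied to each respondent in a group so the group reaches its target.
    weights = {p: targets[p] / claimed_share[p] for p in claimed_share}
    return targets, weights

# Sinn Fein figures from the text; "All others" is a simplifying assumption.
claimed = {"Sinn Fein": 9.0, "All others": 91.0}
actual = {"Sinn Fein": 10.0, "All others": 90.0}

targets, weights = past_vote_weights(claimed, actual)
```

Here the Sinn Fein target is 9.5%, so each claimed past Sinn Fein voter is weighted by 9.5 / 9 (a little over 1), and everyone else is weighted very slightly below 1 so the sample still sums to 100%.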

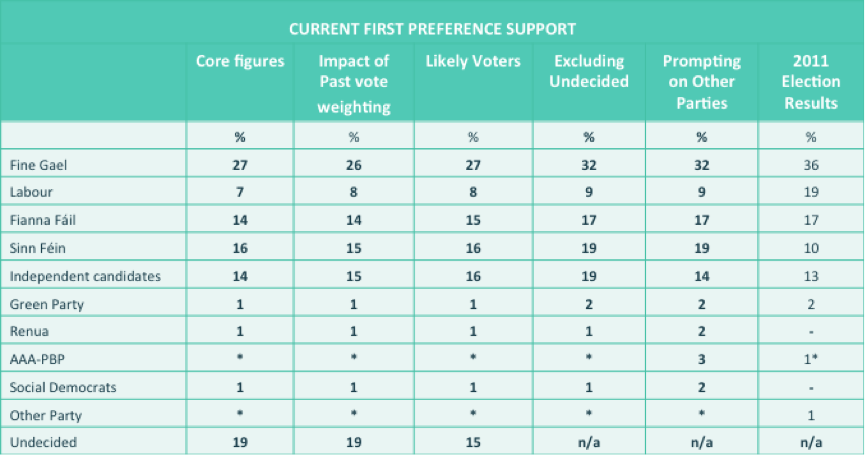

Looking at the table from our last poll before Christmas, you can see the impact this has on our core vote intention figures is very minor, with changes of at most 1% for each party and in most cases less than this (compare the core figures in column 1 with the past vote weighting in column 2).

Next we look at likelihood to vote. As mentioned earlier, we remove those people who say they are very unlikely to vote from our published figures, in order to take account of likely turnout on Election Day. This is only about 10% of the total sample that we speak to, as people who take part in polls are more likely to vote than those who don’t. Again looking at the table above, you can see this has a very marginal effect on most parties, but does remove a good chunk of those who say they are undecided how they would vote. That’s because those who say they definitely won’t vote tend to repeat this, or say they don’t know, when we ask them how they will vote. Removing those very unlikely to vote therefore also has a minimal impact, with at most a 1% change in party share.

Finally, for our published vote intention figures we remove those who are undecided (column 4 above) and base the result on all those who express a preference. This has the effect of assuming that undecided voters will vote the same way as those who are decided at this stage. We used to re-allocate some of these undecided voters based on past vote, but we stopped doing this after the 2011 election as we found it did not improve our results. It is of course possible that a “shy voter” syndrome exists for one party or another, and that people voting for that party are more likely to be undecided at this stage, but there is no major evidence for this at the present time. We will of course be monitoring this by other means as the campaign progresses, and may change how we look at the undecided voters if a clear shy voter bias emerges.
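Removing the undecided and re-basing on those who express a preference amounts to a simple rescaling. The sketch below uses invented party names and shares purely to show the arithmetic; `rebase_excluding_undecided` is a hypothetical helper, not part of any RED C tooling.

```python
def rebase_excluding_undecided(shares, undecided_key="Undecided"):
    """Rescale shares so that groups other than the undecided sum to 100%."""
    decided_total = sum(v for k, v in shares.items() if k != undecided_key)
    return {
        k: 100 * v / decided_total
        for k, v in shares.items()
        if k != undecided_key
    }

# Made-up shares (percent of likely voters), for illustration only.
shares = {"Party A": 24.0, "Party B": 16.0, "Others": 40.0, "Undecided": 20.0}
published = rebase_excluding_undecided(shares)
```

In this made-up case the 20% undecided are dropped and the remaining 80% are scaled up, so Party A moves from 24% to 30%. Every party keeps the same share relative to the others, which is what assuming the undecided split like the decided means in practice.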

As you can see from the table above, the extra analysis that we conduct on our base or core data has very little impact on the poll figures, but we feel this is worth it in order to be as accurate as possible. The biggest change in the last poll was to increase support for Independent candidates by 2%. Fianna Fail and Labour also gained 1%, while Sinn Fein and Labour remained stable. We publish this table in every poll report we put online on our website. So if you don’t like the extra analysis we believe is worthwhile – you can always still see the core vote before any of this is taken into account.

Moving forward, RED C is constantly looking at the way we analyse polls to ensure the best accuracy. This regular interrogation helped us, for instance, to predict the marriage referendum result with 100% accuracy, using a Wisdom of Crowds analysis method. Our sole aim is to provide the most accurate and insightful read for our clients, and we will continue to strive to do so during this General Election campaign.